Explainable AI: Why Transparency Is Becoming a Core Requirement for Trustworthy AI

As AI influences higher-stakes decisions across industries, explainability is shifting from a technical preference to a governance requirement.

Artificial intelligence systems are no longer experimental. They are embedded in hiring platforms, financial risk models, healthcare decision support tools, cybersecurity systems, and educational technologies. As AI adoption accelerates, a fundamental question is becoming impossible to ignore: when an AI system makes a decision, who is responsible for explaining it?

For years, performance metrics such as accuracy, speed, and scalability dominated AI evaluation. While those measures remain important, they are no longer sufficient. Organizations are now confronting a reality in which AI systems influence high-stakes outcomes that affect people’s livelihoods, safety, and rights. In this context, opacity is not merely a technical limitation. It is a governance risk.

This shift has elevated a concept that, until recently, received limited attention outside academic and regulatory circles: Explainable Artificial Intelligence (XAI).

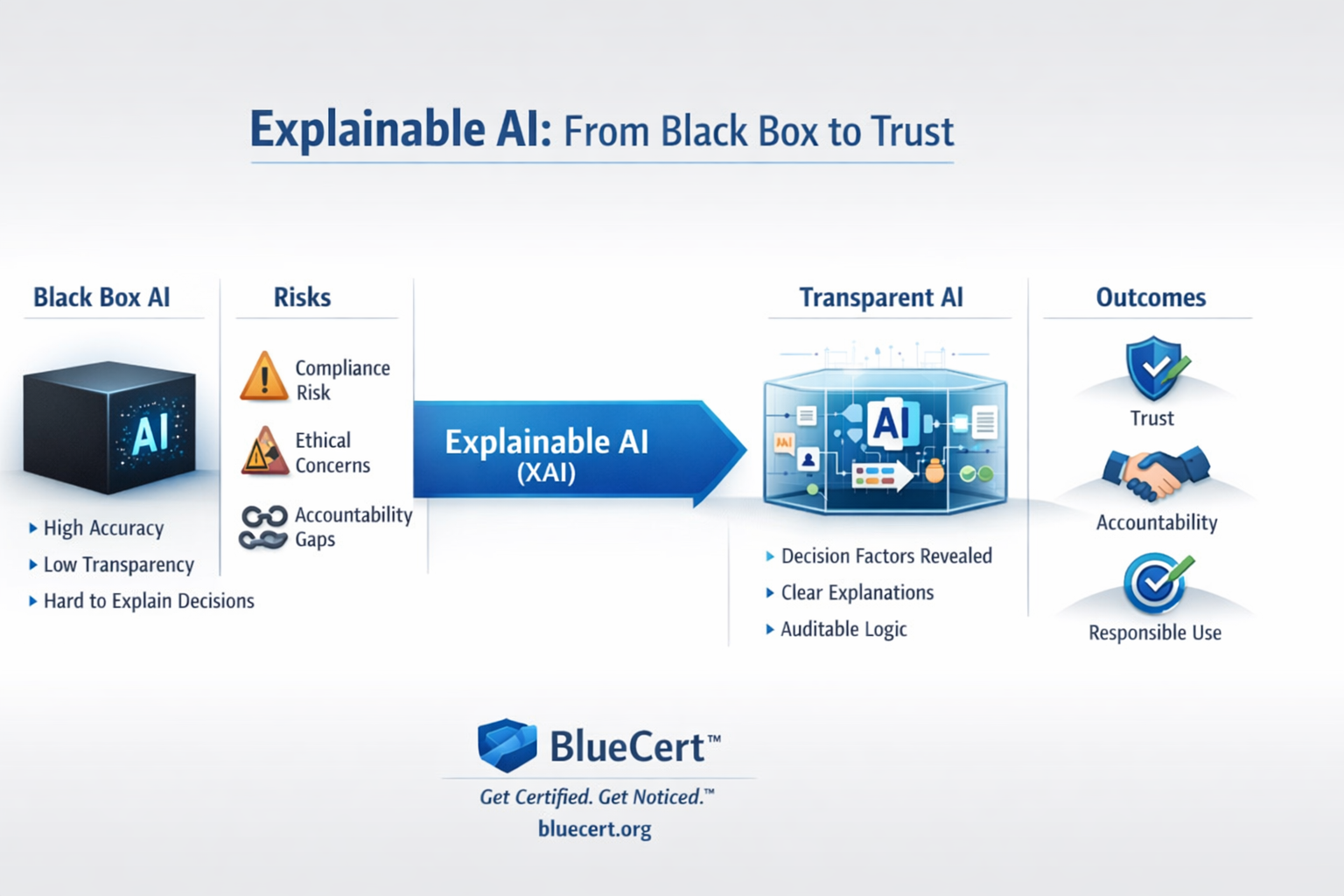

From Black Boxes to Accountable Systems

Many modern AI models, particularly deep learning systems, operate as what are commonly described as black boxes. These systems can produce highly accurate outputs, yet offer little insight into how or why a specific decision was made. For low-risk use cases, this opacity may be tolerable. For high-impact domains, it is increasingly unacceptable.

Consider scenarios where AI systems:

- Recommend whether a loan application should be approved

- Flag potential fraud or cybersecurity threats

- Assist clinicians in diagnosing medical conditions

- Screen job applicants or evaluate academic performance

In each case, an outcome may be statistically defensible yet still fall short from a human or institutional standpoint if it cannot be explained. Organizations are discovering that accuracy without explainability undermines trust, both internally and externally.

Explainable AI addresses this gap by focusing on methods and practices that make AI systems more transparent, interpretable, and accountable to human stakeholders.

What Explainable AI Really Means

Explainable AI does not require every stakeholder to understand the mathematics behind a neural network. Rather, it emphasizes meaningful explanation at the appropriate level.

At its core, XAI enables:

- Insight into which factors influenced a decision

- Justification that can be communicated to non-technical audiences

- Auditing and validation of model behavior

- Detection of bias, errors, or unintended consequences

Importantly, explainability is contextual. An explanation suitable for a data scientist may differ significantly from one required by an executive, regulator, educator, or affected individual. XAI is not a single technique but a discipline that bridges technical systems with human understanding.
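To make "which factors influenced a decision" concrete, the sketch below breaks a single score from a simple linear model into per-feature contributions relative to a reference applicant. It is one illustrative technique among many, not a recommended method. The feature names, weights, and baseline values are all hypothetical, chosen only to echo the loan example earlier in this article.

```python
# Hypothetical weights for an illustrative linear credit-scoring model.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
BIAS = 0.5

# Hypothetical "typical applicant" used as the reference point for explanations.
BASELINE = {"income_k": 50.0, "debt_ratio": 0.3, "late_payments": 1.0}


def score(applicant):
    """Linear score: bias plus the weighted sum of feature values."""
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def explain(applicant):
    """Return (feature, contribution) pairs, largest absolute effect first.

    Each contribution is how far that feature moved the score away from
    the baseline applicant's score.
    """
    contributions = {
        f: WEIGHTS[f] * (applicant[f] - BASELINE[f]) for f in WEIGHTS
    }
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


applicant = {"income_k": 40.0, "debt_ratio": 0.5, "late_payments": 3.0}

print(f"score: {score(applicant):.2f} (baseline: {score(BASELINE):.2f})")
for feature, contribution in explain(applicant):
    print(f"{feature:>14}: {contribution:+.2f}")
```

For a linear model the contributions sum exactly to the gap between the applicant's score and the baseline score, which is what makes the breakdown easy to communicate. Non-linear models need approximation methods to produce a comparable breakdown, and the same numbers would typically be presented differently to a data scientist than to the applicant.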

Why Explainability Is Becoming Non-Negotiable

Several converging forces are making XAI a necessity rather than a preference.

Regulatory pressure is increasing. Emerging AI governance frameworks, such as the EU AI Act and the NIST AI Risk Management Framework, emphasize transparency, accountability, and human oversight. Organizations deploying opaque AI systems face growing compliance risks.

Ethical scrutiny is intensifying. Stakeholders increasingly expect AI systems to be fair, unbiased, and justifiable. When decisions cannot be explained, trust erodes quickly.

Institutional accountability is expanding. Organizations cannot delegate responsibility to algorithms. Leaders remain accountable for outcomes, whether generated by humans or machines.

Operational resilience depends on understanding. Systems that cannot be explained are harder to debug, improve, or defend when challenged.

Together, these factors signal a clear trend: AI systems must be governable, not just powerful.
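The fairness expectation described above can be checked, at least at a basic level, with a very simple audit. The sketch below compares approval rates across groups and reports the ratio of the lowest rate to the highest. The group labels and decisions are made-up data, and the 0.8 review threshold is an illustrative assumption (it mirrors the "four-fifths" rule of thumb used in some employment contexts), not a legal or universal standard.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to its approval rate, given (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up decisions for two hypothetical groups, "A" and "B".
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

REVIEW_THRESHOLD = 0.8  # illustrative assumption, not a universal standard

rates = selection_rates(decisions)
ratio = impact_ratio(rates)
print(rates, f"ratio={ratio:.2f}")
if ratio < REVIEW_THRESHOLD:
    print("Approval rates differ enough to warrant human review.")
```

A low ratio does not by itself show that a system is unfair. It flags a disparity that someone accountable then needs to investigate and explain.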

Explainability as a Human Skill, Not Just a Technical Feature

One of the most overlooked aspects of XAI is that explainability is not solely embedded in code. It also depends on the people who design, deploy, evaluate, and oversee AI systems.

An explainable model is only useful if professionals can:

- Ask the right questions of AI outputs

- Interpret explanations critically

- Communicate implications clearly to stakeholders

- Recognize when explanations are insufficient or misleading

This shifts the conversation from tools alone to competency and certification. Organizations need professionals who understand not only how AI works, but how it should be explained, governed, and challenged.

XAI and the Future of Professional Certification

As AI becomes integral to organizational decision-making, certification frameworks are evolving to reflect new expectations. Knowledge of explainability, transparency, bias mitigation, and governance is becoming essential across roles: not only for data scientists, but also for leaders, auditors, educators, and policymakers.

BlueCert certifications are designed around this reality. Rather than focusing exclusively on tools or programming techniques, BlueCert emphasizes conceptual mastery, ethical responsibility, and governance-aligned competencies. Explainable AI is not treated as an isolated topic. It is a foundational principle that strengthens credibility across AI domains.

This approach reflects a broader shift in how expertise is defined. In the AI era, credibility increasingly depends on the ability to justify decisions, not merely to generate them.

Looking Ahead

The future of AI will not be determined solely by larger models or faster systems. It will be shaped by trust, and trust depends on understanding.

Explainable AI represents a maturation of the field. It signals a move away from blind reliance on automated outputs and toward responsible, transparent, and accountable systems that humans can govern with confidence.

Organizations that embrace XAI early will be better positioned to navigate regulatory change, maintain stakeholder trust, and deploy AI responsibly at scale. Those that do not may find themselves struggling to explain not just their algorithms, but their decisions.

In the age of intelligent systems, the ability to explain is becoming as important as the ability to compute.